Overview

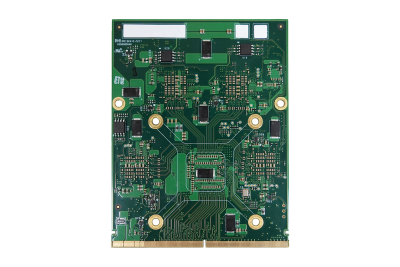

The AI-MXM-H84A is the first-ever MXM 3.1 Type B module powered by four Hailo-8™ AI inference processors. It provides up to 104 TOPS of AI performance with high power efficiency and multitasking support, speeding up the deployment of neural network (NN) and deep learning (DL) workloads on edge devices.

Thanks to its reduced size, weight and power (SWaP), the AI accelerator can be easily integrated into a variety of embedded systems to handle heavy inference workloads with low latency. For example, the AI-MXM-H84A is widely used in automated guided vehicles (AGVs), virtual fencing systems, autonomous machines and computer vision systems.

Users can shorten AI project development cycles with a comprehensive software package that includes AI development tools, and with customization services such as industrial-grade mechanical design and fine-tuning for AI inference model integration.